Bagging in Machine Learning Explained

What are the pitfalls of bagging algorithms? Bagging explained step by step, along with its math.

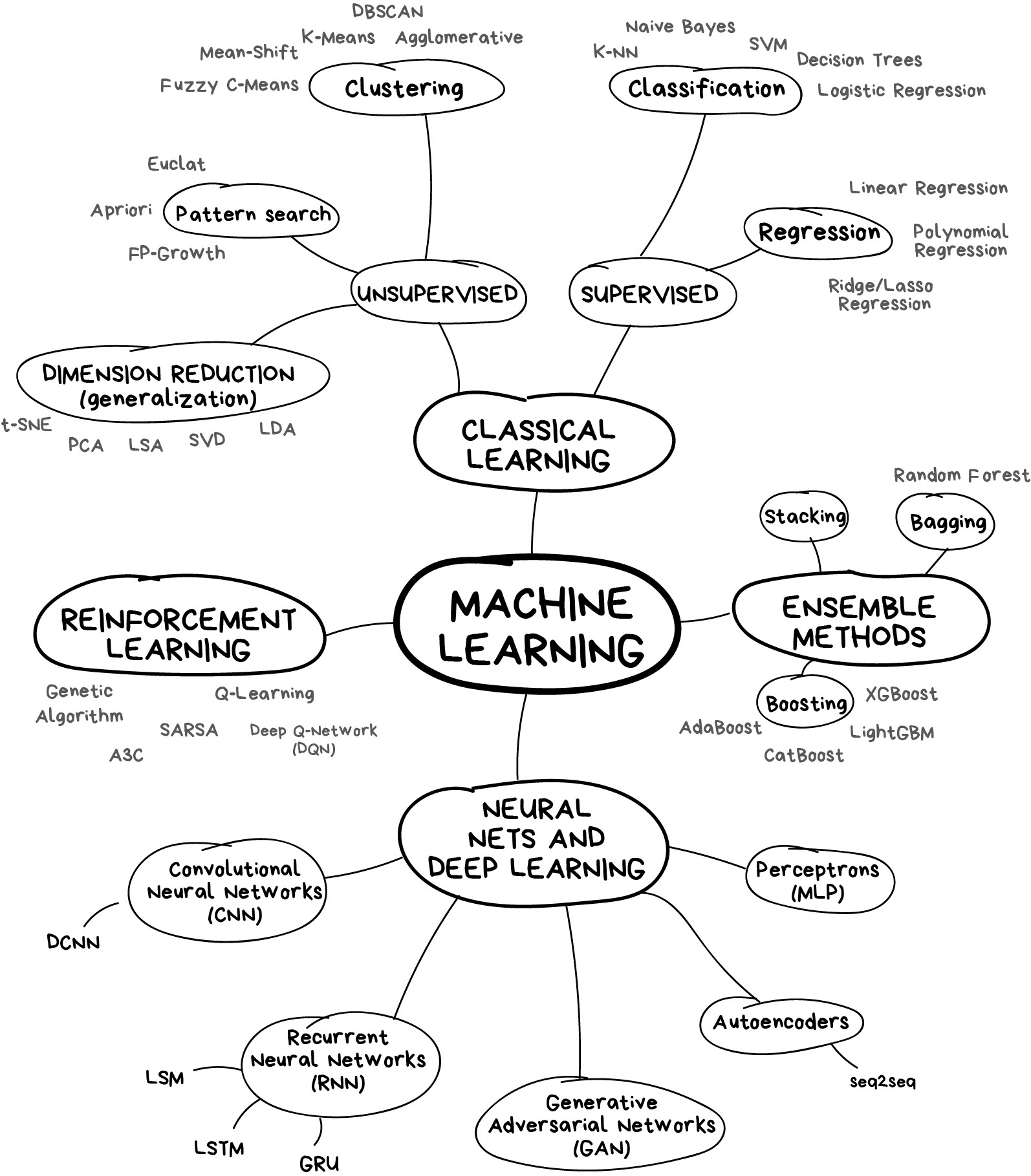



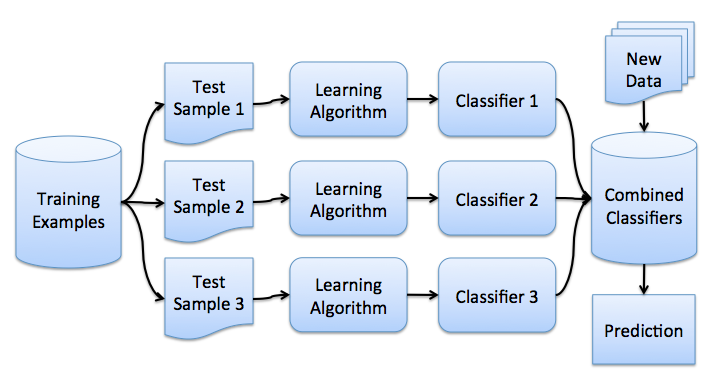

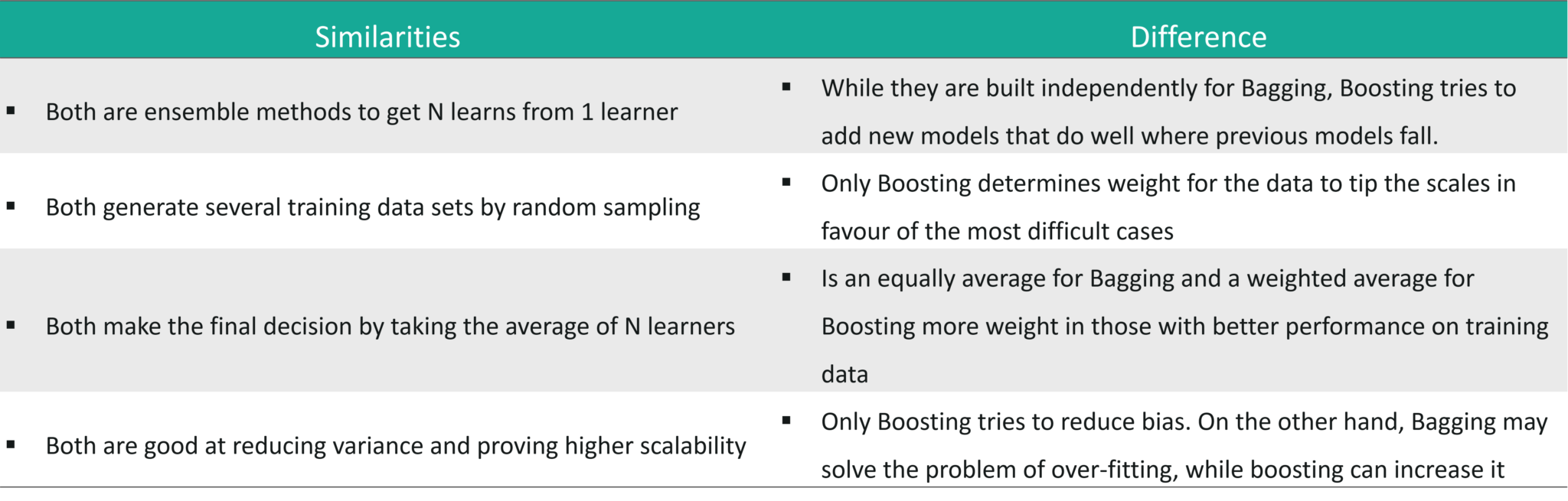

Bagging, also known as bootstrap aggregating, builds on the majority voting principle. It applies the bootstrap procedure to a high-variance machine learning algorithm, usually decision trees. In both bagging and boosting, a single base learning algorithm is used.
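As a minimal sketch of the majority voting principle for classification, the function below returns the label predicted by the most base models. The name `majority_vote` and the toy labels are illustrative assumptions, not part of any particular library.

```python
from collections import Counter


def majority_vote(predictions):
    """Return the label that occurs most often among the base models' predictions."""
    # most_common(1) yields [(label, count)]; on a tie, the label seen first wins.
    return Counter(predictions).most_common(1)[0][0]


# Hypothetical predictions from five base models for one input.
votes = ["spam", "ham", "spam", "spam", "ham"]
print(majority_vote(votes))
```

For regression, the vote is typically replaced by an average of the base models' outputs.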

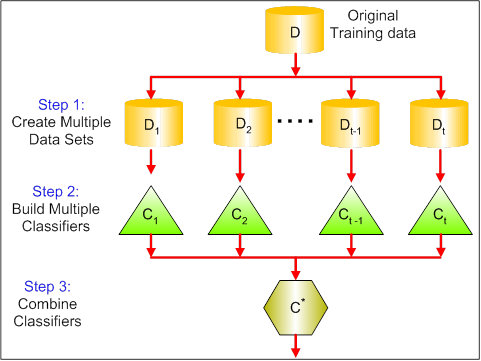

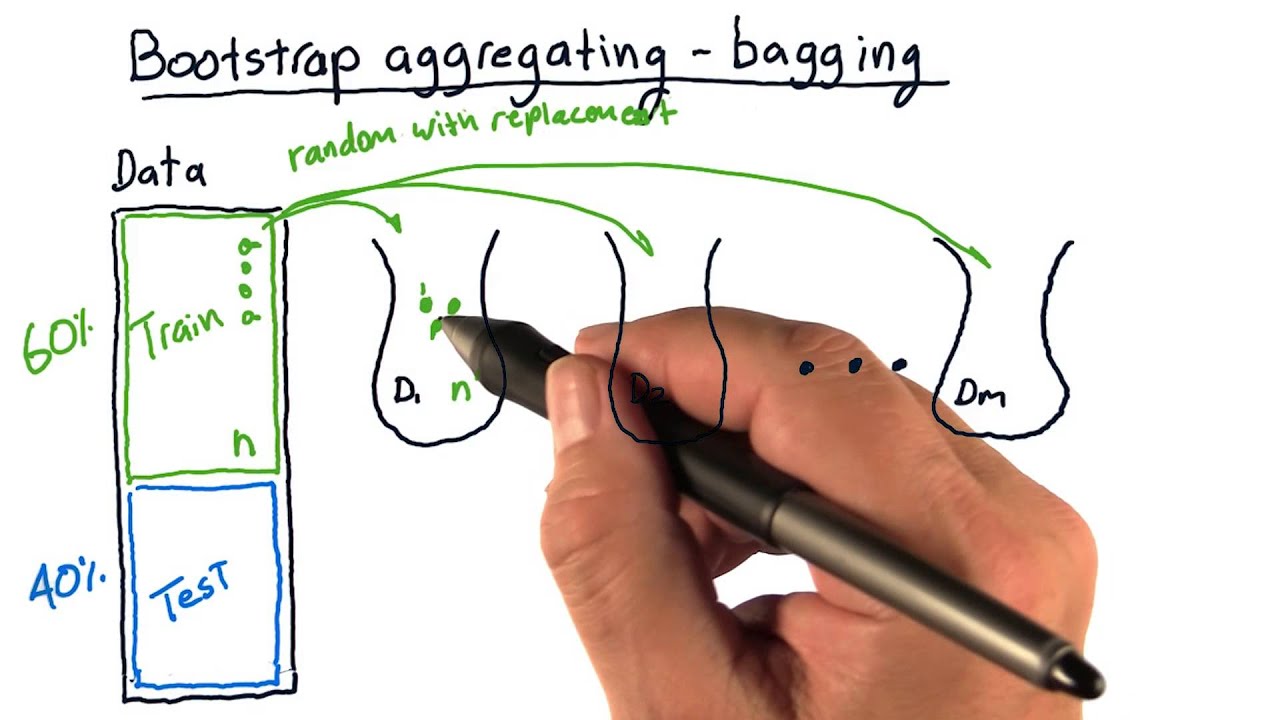

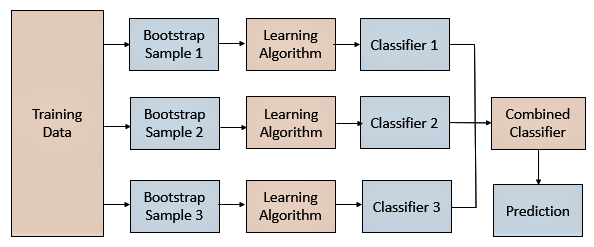

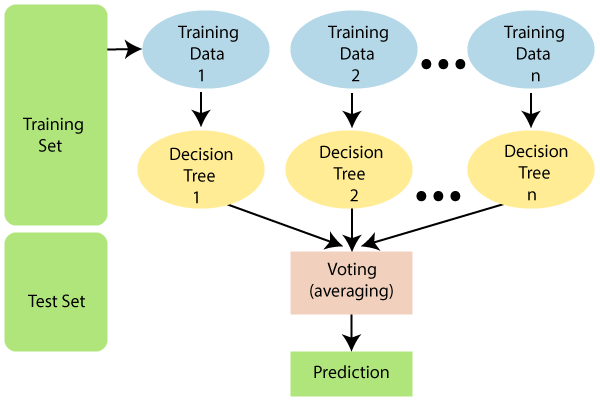

It does this by drawing random subsets of the original dataset with replacement and fitting a base model to each subset. Bagging consists of fitting several base models on different bootstrap samples and building an ensemble model that averages the results of these weak learners.
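The procedure described above can be sketched in plain Python. This is an illustration under stated assumptions, not a reference implementation: the base learner is a hypothetical 1-nearest-neighbour regressor chosen only because it is simple and high-variance, and the helper names, the toy data, and `n_models=25` are all made up for the example.

```python
import random
from statistics import mean


def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    return [rng.choice(data) for _ in data]


def fit_1nn(sample):
    """Hypothetical base learner: 1-nearest-neighbour regression on (x, y) pairs."""
    def predict(x):
        # Return the target of the training point whose x is closest to the query.
        nearest = min(sample, key=lambda pair: abs(pair[0] - x))
        return nearest[1]
    return predict


def bagged_regressor(data, n_models=25, seed=0):
    """Fit one base model per bootstrap sample and average their predictions."""
    rng = random.Random(seed)
    models = [fit_1nn(bootstrap_sample(data, rng)) for _ in range(n_models)]
    return lambda x: mean(m(x) for m in models)


# Toy data (an assumption for illustration): y = 2x plus Gaussian noise.
noise = random.Random(42)
data = [(i / 10, 2 * (i / 10) + noise.gauss(0, 0.5)) for i in range(100)]

predict = bagged_regressor(data)
print(predict(5.0))  # should land near 2 * 5.0 = 10, up to noise
```

Averaging is the aggregation rule for regression; for classification, the same loop would collect labels and take a majority vote instead.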

Ensemble machine learning methods can be broadly categorized into bagging and boosting.

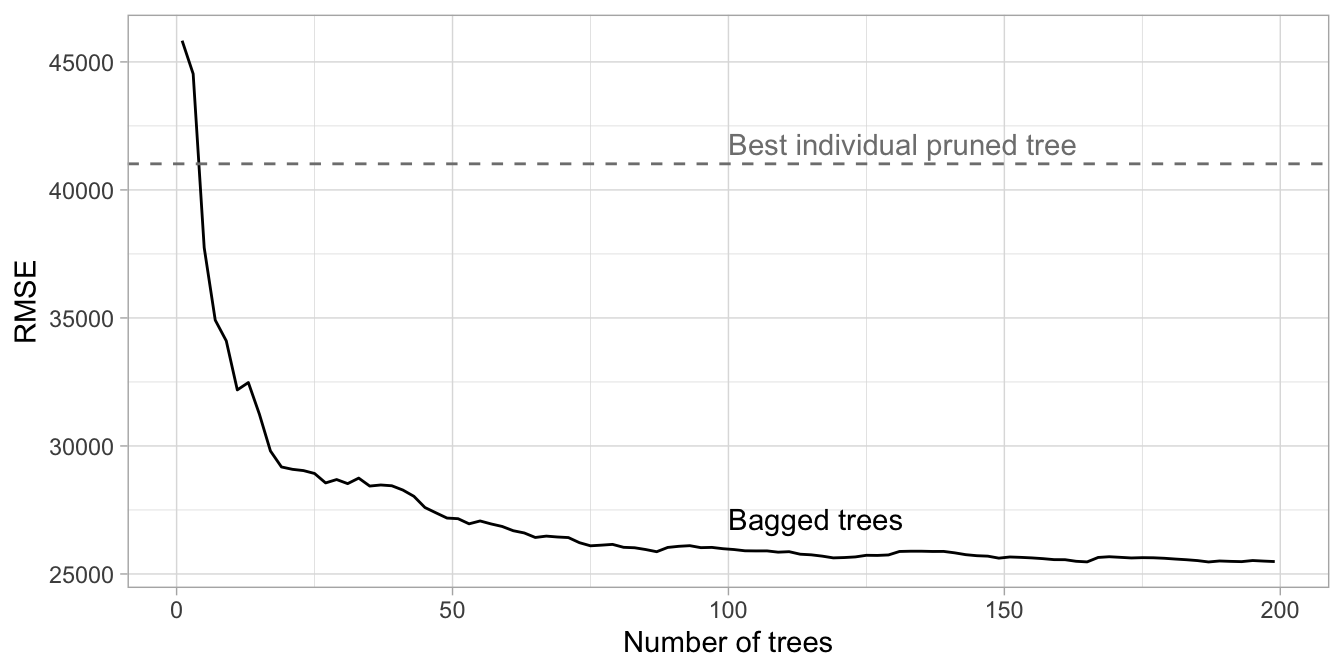

The bagging technique is useful for both regression and statistical classification. A fresh bootstrap sample is drawn each time a model is trained.

In 1996, Leo Breiman introduced the bagging algorithm, which has three basic steps: bootstrapping the data, training a model on each sample, and aggregating the predictions. Bagging (from bootstrap aggregating) is a machine learning ensemble meta-algorithm meant to increase the stability and accuracy of machine learning algorithms used in statistical classification and regression.

Bagged decision trees tend to be structurally similar, so their predictions are correlated. Bagging aims to improve the accuracy and performance of machine learning algorithms. Bagging, also known as bootstrap aggregating, is the process in which multiple models of the same learning algorithm are trained on bootstrapped samples.
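Why correlation between the trees matters can be seen from a standard variance identity. As a sketch, assume $B$ base models $\hat{f}_1, \dots, \hat{f}_B$ that are identically distributed, each with variance $\sigma^2$ at a point $x$, and with pairwise correlation $\rho$ (these symbols are introduced here for illustration). The variance of their average is then:

```latex
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B}\hat{f}_b(x)\right)
  = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
```

The second term shrinks as $B$ grows, but the first does not, so adding more highly correlated trees gives diminishing returns. This is one of the main pitfalls of plain bagging.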

Multiple subsets of equal size are created from the original dataset by selecting observations with replacement.

Let's assume we have a sample dataset of 1000 observations. Because a single base learning algorithm is used, the weak learners at hand are homogeneous.
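To make the 1000-observation example concrete, the sketch below draws one bootstrap sample of indices and checks how many distinct observations it contains. The seed and variable names are arbitrary choices for illustration. Because sampling is with replacement, each observation is missed with probability $(1 - 1/n)^n$, so roughly 63% of the original observations are expected to appear in any one sample.

```python
import random

n = 1000
rng = random.Random(0)  # fixed seed, chosen arbitrarily for reproducibility
indices = list(range(n))

# One bootstrap sample: n draws with replacement from the n original indices.
sample = rng.choices(indices, k=n)

# Fraction of distinct original observations that made it into the sample.
unique_fraction = len(set(sample)) / n

# Observations never drawn are "out of bag" for this sample.
out_of_bag = set(indices) - set(sample)

# Expected fraction of distinct observations: 1 - (1 - 1/n)^n, close to 1 - 1/e.
expected = 1 - (1 - 1 / n) ** n

print(f"unique: {unique_fraction:.3f}, expected: {expected:.3f}, out of bag: {len(out_of_bag)}")
```

The out-of-bag observations are often used as a built-in validation set for the model trained on that sample.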
